“Some people call this artificial intelligence, but the reality is this technology will enhance us.

So instead of artificial intelligence, I think we’ll augment our intelligence.”

– Ginni Rometty

It’s fascinating how AI has become the buzzword of this generation. From news tabloids to YouTube Shorts, wherever you click, you will find a podcast, blog, or article that mentions AI.

AI is EVERYWHERE and it’s extremely POWERFUL!

Walk into a store and you will find AI-generated product recommendations made just for you. If you want to skip the line, coffee giants make it easy with AI-enabled voice ordering: place your order beforehand, and your coffee will be ready by the time you reach the store!

AI in Information Technology is booming! It is one of the most talked-about topics, and we are here to discuss all the deets. So, if you have any ifs and buts about AI in your mind, jot them down in your notebook now, because all your questions are sure to get an answer by the end of this blog.

Overview: Importance of Artificial Intelligence in Information Technology

Did you know AI has been around for quite some time? The term was coined back in 1955, almost 69 years ago, by John McCarthy, a brilliant mathematics professor at Dartmouth, in connection with a seminal conference, according to a Harvard article.

Coming back to AI in Information Technology: AI is short for Artificial Intelligence, the simulation of human intelligence processes by machines, particularly computers. In short, it mimics tasks that were earlier performed by humans. Some of the common AI technologies and applications include Natural Language Processing, Machine Learning, Deep Learning, Expert Systems, Speech Recognition, and more.

In IT today, AI applications are mainly used for:

- Robotic Process Automation for Repetitive Tasks

- Decision-Making

- Virtual Assistants

- Combating Cybersecurity Issues & More

You may be wondering what this blog will cover. It delves into the role of AI in the IT industry, the challenges the IT industry faces and how AI and Machine Learning address them, the benefits AI provides to the IT sector and how it has impacted the industry, use cases from industries worldwide, future trends and predictions, and more.

What Are the Key Challenges Faced by the IT Industry & How Does AI Resolve Them?

Are you ready to explore some serious questions that have been floating around for some time now? Questions like: How has AI helped the IT industry? Is the IT industry ready for AI? Which challenges has AI helped the IT industry overcome?

Is Machine Learning a part of AI? How is Machine Learning helping? What is the future of AI in the IT industry? What does the market for AI and Machine Learning look like? What is the impact of AI?

According to Forbes Advisor, ChatGPT, the most talked-about AI tool (we are sure you have heard of it), surpassed a massive 10 lakh (1 million) users within just five days of its launch in November 2022. Reports also say that AI tools have helped employees at tech firms boost their productivity by 14%. That makes it clear that IT is ready for AI.

ChatGPT is one example of generative AI, but AI has helped the IT industry with many other challenges too. AI and Machine Learning go hand in hand, and Machine Learning in particular has helped businesses with strategic decision-making. So, let’s look at AI and Machine Learning in practice and compare how things worked before and after AI.

How Is AI Used in the IT Industry?

1. Data Management & Security

Before AI in IT:

Data management, service management, and data security are among the most vital aspects of any business. Why? Because they involve handling sensitive data and protecting it from hackers and other potential threats.

Traditionally, data protection was manual and relied on protocols and processes defined by IT teams. Although this offered a degree of protection, it was error-prone and struggled to keep up with growing data volumes.

After AI in IT:

AI technologies can handle large amounts of data. They identify patterns and anomalies to provide real-time insights, which helps improve data protection approaches.

2. Cybersecurity Threats

Before AI in IT:

In cybersecurity, traditional techniques rely on signatures or indicators of compromise to find threats. This approach works well for threats that have been seen before, but it struggles to detect threats that have not yet been discovered.

After AI in IT:

You can use AI systems to improve the threat hunting process through behavioural analysis or AI-powered threat intelligence. Here, AI monitors network traffic and system logs to identify threats and suspicious activities that may pose security risks. We recommend combining traditional and AI-based techniques for the best results in cybersecurity.
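To make the "combine traditional and AI together" advice concrete, here is a minimal sketch, assuming a hypothetical signature list (`KNOWN_BAD_IPS`) and a per-host history of requests per minute. The signature check catches known threats, while a simple statistical baseline (a z-score, standing in for a real trained behavioural model) flags unusual activity that no signature describes. All names and numbers are illustrative, not taken from any specific product.

```python
from statistics import mean, stdev

# Hypothetical signature list: IPs seen in past attacks (documentation ranges).
KNOWN_BAD_IPS = {"203.0.113.7", "198.51.100.23"}


def is_anomalous(history, current, threshold=3.0):
    """Behavioural check: flag `current` if it is more than `threshold`
    standard deviations above the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to build a baseline yet
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return current > mu  # flat baseline: any increase stands out
    return (current - mu) / sigma > threshold


def assess(source_ip, requests_per_min, history):
    """Combine a traditional signature match with a behavioural baseline
    and return the reasons (if any) the event looks suspicious."""
    reasons = []
    if source_ip in KNOWN_BAD_IPS:
        reasons.append("signature: known-bad IP")
    if is_anomalous(history, requests_per_min):
        reasons.append("behaviour: unusual request rate")
    return reasons


baseline = [40, 42, 38, 41, 39, 43, 40]  # past requests per minute from one host
print(assess("192.0.2.10", 41, baseline))   # → []
print(assess("192.0.2.10", 400, baseline))  # → ['behaviour: unusual request rate']
print(assess("203.0.113.7", 41, baseline))  # → ['signature: known-bad IP']
```

Neither check alone covers both cases: the signature misses the new traffic spike, and the baseline misses a known attacker who behaves normally.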

3. Technological Advancements

Before AI in IT:

It was difficult to keep up with current trends in a competitive market. Manually researching and adapting to new technologies could take hours, and experts first had to study and understand the market before coming up with their solutions.

After AI in IT:

Experts can use AI technologies and tools such as Machine Learning, Computer Vision, Deep Learning, and NLP to stay competitive. These tools can analyze the market and produce a brief report on what to do and how to do it, so you can focus on work that needs your full attention.

4. Legacy System Integrations

Before AI in IT:

Legacy system integration is the process of connecting older or on-premises systems to modern cloud-based digital technologies. Traditionally, IT firms struggled to integrate legacy systems with modern technologies, which also held back IT infrastructure updates.

After AI in IT:

Using Artificial Intelligence to integrate legacy systems eases these complications, for example by helping map data between old and new formats, making integrations and IT infrastructure updates smoother.

5. HR Recruitment

Before AI in IT:

Before AI, recruitment was a time-consuming process. HR personnel had to manually screen resumes, schedule interviews, and onboard new hires. This often led to delays and inconsistencies in the hiring process. Similarly, performance reviews were subjective and conducted annually, limiting opportunities for ongoing feedback and hindering employee development.

After AI in IT:

AI has helped HR by taking over routine tasks. AI-powered recruitment tools can now scan resumes, identify qualified candidates based on specific criteria, and even schedule interviews. Performance management software allows for continuous feedback and goal setting, which helps create a more developmental environment for employees.
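Here is a minimal sketch of "identify qualified candidates based on specific criteria", assuming each candidate has plain resume text and a years-of-experience figure. Real AI recruitment tools typically use trained language models; this keyword-based scorer is a simplified stand-in, and `Candidate`, `mentions`, and `screen` are hypothetical names used only for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    resume_text: str
    years_experience: int


def mentions(text, skill):
    """True if `skill` appears in `text` as a whole word or phrase."""
    return re.search(r"\b" + re.escape(skill.lower()) + r"\b", text.lower()) is not None


def screen(candidates, required_skills, min_years):
    """Drop candidates below `min_years`, score the rest by the fraction of
    required skills their resume mentions, and sort best first."""
    shortlist = []
    for c in candidates:
        if c.years_experience < min_years:
            continue
        matched = sum(mentions(c.resume_text, s) for s in required_skills)
        shortlist.append((c.name, matched / len(required_skills)))
    return sorted(shortlist, key=lambda pair: pair[1], reverse=True)


pool = [
    Candidate("Asha", "Python developer with SQL and AWS experience", 4),
    Candidate("Ben", "Java and SQL engineer", 6),
    Candidate("Chen", "Python and AWS intern", 1),
]
for name, score in screen(pool, ["Python", "SQL", "AWS"], min_years=2):
    print(f"{name}: {score:.2f}")  # prints "Asha: 1.00" then "Ben: 0.33"
```

Matching on word boundaries avoids false hits such as "Go" inside "Good". A shortlist like this is best treated as a first pass for a human recruiter, since any fixed criteria can carry bias.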

Our Advice

Looking at all these challenges and how AI has helped the industry cope with them, we can see the solid change AI is making. But what is the future of AI in the IT industry?

Well, we expect major advancements. Right now, AI still requires human intervention. In the future, it might be able to handle many of these tasks on its own, leaving us free to focus on other important work.

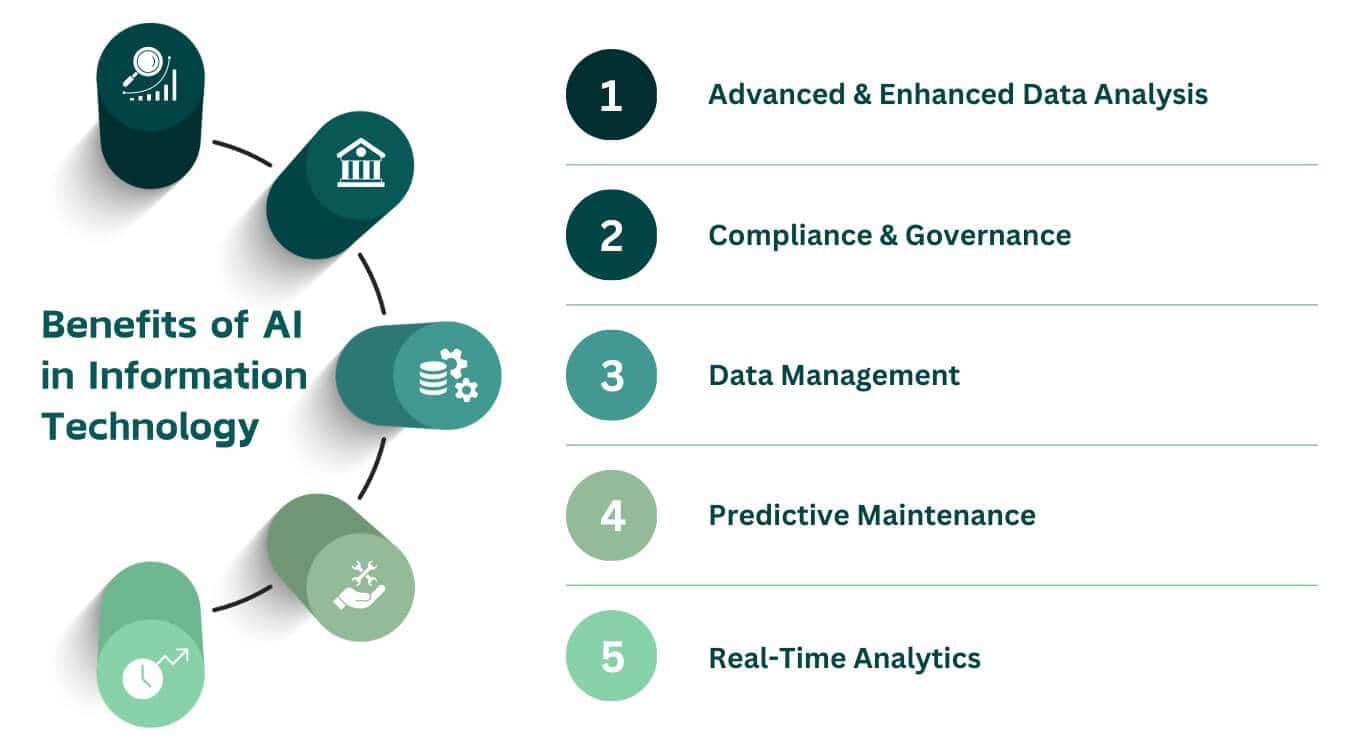

How Is AI Benefiting Information Technology?

AI is definitely benefiting the IT industry! In fact, the application of Artificial Intelligence in Information Technology has helped massively, and we are sure you have seen some of its impact yourself. If you are curious to know more, we have curated the top benefits of AI in IT below:

- AI-Powered Automation in Data Analysis

Automation plays a significant role in AI-driven IT operations management, delivering speed and accuracy at their best. AI can process huge amounts of data and extract the vital information a firm needs. IT experts can then use these insights to better understand system performance, user behaviour, and market trends, and to support strategic decision-making.

- Compliance & Governance

By monitoring and auditing IT operations and processes, Artificial Intelligence can help ensure compliance with industry regulations and governance protocols. AI helps by reducing false positives, confirming that procedures are actively followed, and more.

- Data Management

AI techniques make it easier for the IT industry to manage and use huge datasets efficiently by helping with data categorization, classification, retrieval, and more.

- Predictive Maintenance

AI systems can, in a sense, see what lies ahead: they use data patterns to predict software and hardware failures before they occur. This enables proactive maintenance, reducing unplanned downtime and costs.

- Real-Time Analytics

AI in IT operations can provide real-time analytics on user behaviour, system performance, and software, giving you more time to focus on strategic decision-making and response.

What Are the Major AI Use Cases in the IT Industry?

AI systems have evolved and, over time, have spread across many areas of IT operations, improving productivity, efficiency, and decision-making. Below are some of the most talked-about use cases in IT operations.

a) Predictive Maintenance

Predictive maintenance means predicting when equipment might fail so that you can prevent the failure by performing maintenance in time. Here, AI analyzes data from sensors and other sources to identify when hardware such as servers, networking equipment, and storage devices, or software, may run into issues or failures.

By understanding these issues and spotting warning signs early, you can schedule maintenance or replacements in advance, preventing downtime and minimizing operational costs.
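Spotting warning signs early can be illustrated with a minimal sketch, assuming a hypothetical series of daily disk-temperature readings and a hypothetical failure threshold of 70 °C. It fits a straight-line trend with ordinary least squares and extrapolates when the threshold will be crossed. Production systems usually use richer models (many sensors, learned failure patterns); a linear trend is simply the easiest instance of the idea.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_threshold(hours, readings, threshold):
    """Extrapolate the linear trend and return how many hours remain after
    the last reading before `threshold` is reached (None if not rising)."""
    slope, intercept = fit_line(hours, readings)
    if slope <= 0:
        return None  # readings are flat or falling: no crossing predicted
    crossing = (threshold - intercept) / slope
    return max(0.0, crossing - hours[-1])


# Hypothetical disk temperatures (°C) sampled once a day.
hours = [0, 24, 48, 72, 96]
temps = [50, 52, 54, 56, 58]
remaining = hours_until_threshold(hours, temps, threshold=70)
MAINTENANCE_WINDOW_HOURS = 168  # hypothetical policy: act if within a week
if remaining is not None and remaining <= MAINTENANCE_WINDOW_HOURS:
    print(f"Schedule maintenance: threshold expected in ~{remaining:.0f} h")
```

With these readings the temperature rises 2 °C per day, so the 70 °C threshold is about 144 hours away, inside the one-week window, and maintenance gets scheduled before the failure.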

b) IT Support

You may have seen how difficult it can be to handle user inquiries and technical issues. At times like this, Artificial Intelligence can really help the IT support and service team. How?

Through AI-powered chatbots and virtual assistants that can manage ticketing systems, handle routine requests, and improve user satisfaction. By taking care of such issues, they free you to work on other challenges and strategic initiatives.
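How a support assistant might triage tickets can be sketched minimally as follows, assuming hypothetical routing rules and queue names. Real chatbots usually rely on NLP models to understand intent; this keyword-overlap router is a simplified stand-in that shows the workflow: answer routine requests automatically, route the rest to the right team, and escalate anything unrecognized to a person.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical routing rules: (keywords, queue, canned reply or None).
RULES = [
    ({"password", "reset", "locked"}, "self-service",
     "You can reset your password from the account portal."),
    ({"vpn", "network", "wifi"}, "network-team", None),
    ({"laptop", "printer", "monitor"}, "hardware-team", None),
]


@dataclass
class Routing:
    queue: str
    auto_reply: Optional[str]


def triage(ticket_text):
    """Route a ticket to the rule sharing the most keywords with it;
    tickets that match nothing go to a human."""
    words = set(re.findall(r"[a-z]+", ticket_text.lower()))
    keywords, queue, reply = max(RULES, key=lambda rule: len(rule[0] & words))
    if not keywords & words:
        return Routing("human-review", None)
    return Routing(queue, reply)


print(triage("I am locked out and need a password reset"))
print(triage("VPN keeps dropping on the office wifi"))
print(triage("Question about my invoice"))
```

Sending unmatched tickets to `human-review` rather than guessing keeps the assistant from misrouting requests it does not understand.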

c) Cybersecurity

You may already know how big a role cybersecurity plays in the IT industry. Now let’s look at the impact AI has on it.

AI monitors network traffic, system logs, and user behaviour to identify anomalies and activities that might be causing issues. By analyzing this data in real time, it can reveal threats such as malware and intrusion attempts that might go unnoticed with traditional security measures.

Artificial Intelligence can also trigger alerts, automate incident response actions, and block malicious activity or isolate affected devices. This strengthens your cybersecurity and mitigates risks by reducing response time and safeguarding your data and resources from attacks.
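The alert, block, and isolate steps above can be pictured as a simple response playbook. This is a minimal sketch, assuming a hypothetical 1 to 10 severity score produced by an upstream detector and hypothetical thresholds for each action. The actions are only described as strings here; a real system would call firewall or endpoint-management tools at these points.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    host: str
    source_ip: str
    severity: int  # hypothetical scale: 1 (low) to 10 (critical)


def respond(alert, block_at=7, isolate_at=9):
    """Return the response actions a playbook would take for `alert`.
    Actions are only described here; a real system would call firewall or
    endpoint-management tools instead."""
    actions = [f"alert: notify on-call about {alert.host} (severity {alert.severity})"]
    if alert.severity >= block_at:
        actions.append(f"block: drop traffic from {alert.source_ip}")
    if alert.severity >= isolate_at:
        actions.append(f"isolate: quarantine {alert.host} from the network")
    return actions


for action in respond(Alert("web-01", "203.0.113.7", severity=9)):
    print(action)
```

Escalating in tiers means low-severity events only notify a person, while the most severe ones are contained automatically, which is where the reduced response time comes from.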

d) Data Analytics

AI can help your business intelligence team extract valuable information from complex datasets, work that might take hours if done manually. It can uncover trends, patterns, and correlations that traditional analysis might miss. These insights then help Business Intelligence drive decision-making across various business domains.

AI can also suggest actionable strategies to run business services and improve efficiency, and it can help your enterprise with data visualization, uncovering detailed insights, automating report generation, and more. With Natural Language Processing (NLP), you can even offer a conversational interface where users ask questions about data and receive insights, making data more accessible to non-technical people.

What Are the Future Trends & Forecasts for AI in Information Technology?

We have seen the potential AI has in the IT industry and its impact across various fields so far, so it will be fascinating to see what the future holds. Everyone, including you and me, has their own ideas and perspectives on Artificial Intelligence: whether it will create ample opportunities or bring something extraordinary that we have yet to see.

The opportunities are endless. Here are some important trends and forecasts we have curated from the internet and from experts. Major AI developments are likely to include:

- An increase in the number of chatbots and virtual assistants.

- Better AI-driven recommendations for the products you purchase.

- Stronger data privacy through rigorous updates.

- Greater attention to ethical considerations surrounding AI, such as bias, privacy, and accountability, as Deep Learning keeps advancing.

- More smart AI applications, along with the rise of the Meta workplace, Deep Learning, and virtual reality.

-

Final Note:

You have made it this far. Great! We would like to end this blog with a thought-provoking question about AI in the IT industry, so that the next time someone around you is discussing Artificial Intelligence or its applications, you can share your own inputs and ask questions that get them thinking. So, here is our question: what do you think will be the next major development in AI?

If you have an answer, feel free to comment below. We would love to read your responses and learn what you think about this whole AI concept. We would also be happy to continue the conversation with you and your close ones.

That wraps up our blog on AI in the IT industry. If you would like to read more informative blogs like this or have queries about our Artificial Intelligence based services, call us today. Our experts are available 24/7 to help you with any kind of AI development. Until then, enjoy reading this AI blog!