According to a study, more than 80% of enterprises are predicted to use generative AI application programming interfaces (APIs) or employ gen AI-enabled applications in operational environments by 2026. The growing need for faster product development, optimized processes, and enhanced customer experiences is expected to increase the use of AI over the coming decade.

To understand why organizations are adopting AI solutions and prompt engineering at such a rapid pace, we’ll look at what prompt engineering is, the best practices for writing prompts, its relevance in real-life applications, and future trends.

What is Prompt Engineering?

In simple words, prompt engineering is the process of creating inputs for gen AI tools that help to produce accurate responses. This is best explained with a real-world example of building a piece of furniture. The fundamentals of designing furniture include purchasing high-quality materials, following assembly instructions, and constructing the final product using the right tools.

Working with generative AI models follows a similar practice: the prompt engineer designs inputs, called prompts, that the model can interpret and understand to produce optimal outputs. The practice of writing prompts is called prompt engineering.

The most reliable way to get the best outcomes from a gen AI model is to bridge the gap between raw queries and meaningful AI-generated responses. By iteratively refining prompts, engineers can design inputs that work well alongside the other inputs a gen AI tool receives.

High-quality, well-informed prompts are crucial for the quality of AI-generated content such as text, images, code, or data summaries. Thorough inputs elicit suitable answers from AI models, such as those that generate code, synthesize text, and write email responses. Thus, high-quality prompt engineering reduces the need for manual evaluation and post-generation editing, enhancing user experience by saving time and effort.

What is Prompt Engineering in AI?

Prompt engineering in AI involves users guiding AI models to generate desired outputs. Generalized AI solutions need prompt engineering to define tasks, manage outputs, eliminate bias, and facilitate a platform for human-to-AI synergy.

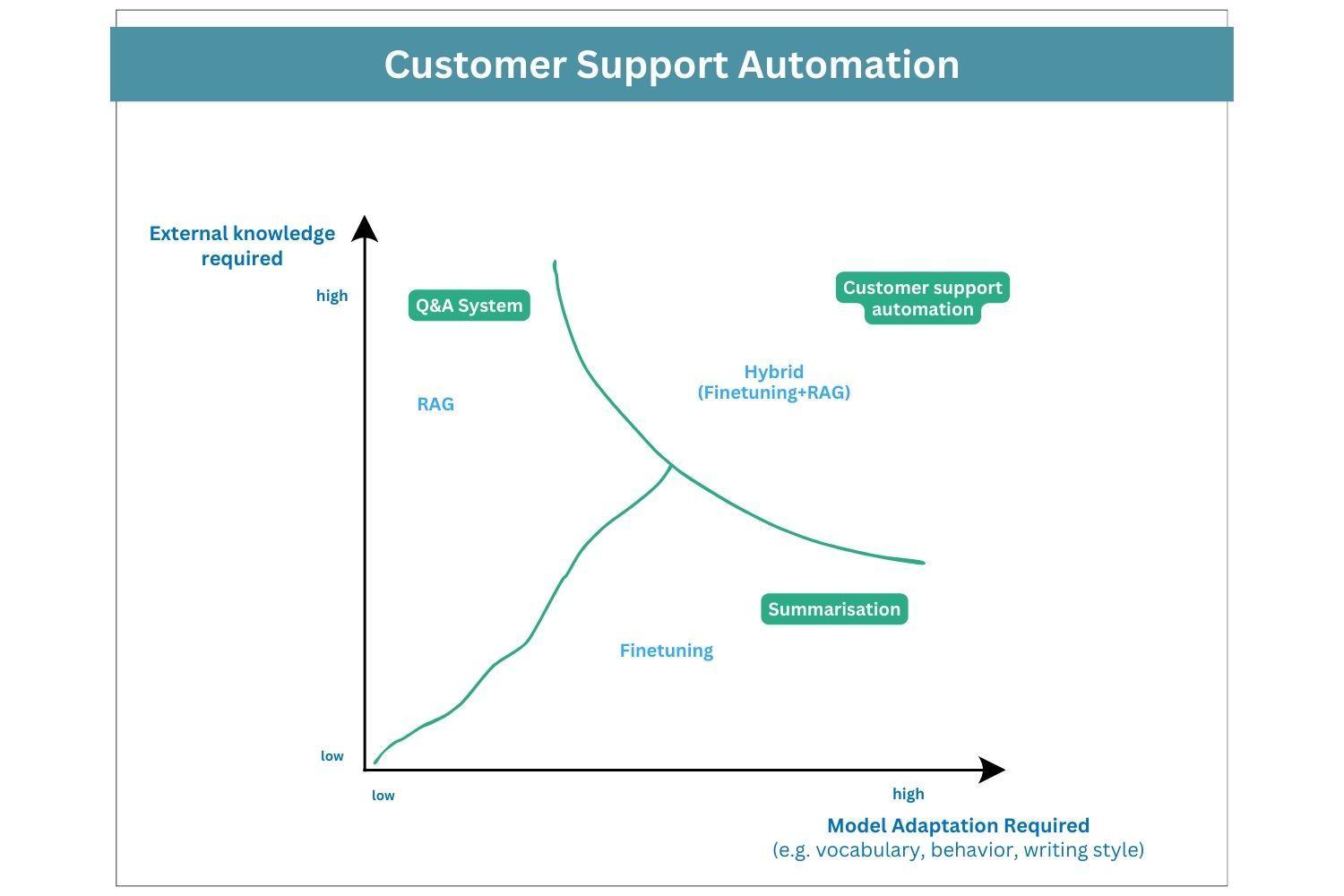

Gen AI is changing the way knowledge workers execute everyday tasks. Enterprises are using LLMs (Large Language Models) such as Gemini and ChatGPT to boost employee productivity. These enterprises are partnering with vendors to optimize LLM behavior using three essential methods: prompt engineering, retrieval-augmented generation (RAG), and fine-tuning.

In simple words, the RAG method exposes an LLM to real-world and accurate data sources instead of relying on internal language representation alone. This helps generate precise responses from AI models and is well-suited for enterprise use.
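The retrieval idea described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `DOCUMENTS` list, the word-overlap scoring, and the function names are all hypothetical, and a real RAG system would typically use an embedding model and a document store instead.

```python
import re

# Hypothetical in-memory knowledge base; a real system would query a document store.
DOCUMENTS = [
    "Refunds are processed within 5 business days of approval.",
    "Premium support is available on weekdays from 9am to 6pm.",
    "Orders can be cancelled any time before they ship.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:top_k]

def build_rag_prompt(question, documents, top_k=1):
    """Place the retrieved passages ahead of the question as grounding context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

prompt = build_rag_prompt("How long are refunds processed?", DOCUMENTS)
print(prompt)
```

The resulting prompt is what would be sent to the LLM, so the model answers from the supplied passage rather than from its internal representation alone.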

As the figure below summarizes, when prompt engineering is combined with fine-tuning and the RAG method, enterprises can build highly capable gen AI solutions. For example, the customer support automation shown in the figure results from a hybrid solution that combines prompt engineering, RAG, and fine-tuning.

Enterprises should collaborate with partners that deploy a holistic approach to building gen AI solutions.

Apart from LLMs, organizations are building AI agents that interact with humans and engage with their environment to collect data and interpret it, performing self-determined tasks according to predetermined goals. Autonomous LLM-based AI agents differ from other AI solutions because they can make rational decisions, based on their interpretation of the data, to produce optimal responses.

How does Prompt Engineering Work?

An effective prompt engineering process requires understanding user intent, then refining and improving prompts.

Here’s a step-by-step guide on how prompt engineering works:

- Assessing the problem: Start by deciding what you want your model to do and evaluating the task’s structure. Prompts are typically framed around one of three task types: question-answering, text generation, or sentiment analysis. This involves understanding the type of information needed (subjective or analytical), identifying whether the output should be a story or an article, and helping the AI model discern the sentiment of the text.

- Composing the initial prompt: Maintaining flexibility while crafting the initial input is important. This includes iterative refinement of the prompt to achieve the desired results.

- Analyzing the model’s output: This step helps to understand if the model’s response harmonizes with the task’s predetermined goal.

- Tuning and enhancing the prompt: This step underlines the purpose of prompt engineering, where users must refine the prompt’s language to communicate effectively with LLMs.

- Examining the prompt on multiple models: Testing the prompt can give you insights into its efficiency and help you apply it across different LLMs.

- Engineering for real-world applications: This final step covers the prompt’s transition from development to deployment, after which the prompt can be reused in other applications.
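The compose, analyze, and refine steps above can be sketched as a small loop. This is only an illustration: `fake_model` is a hypothetical stand-in for a real LLM call, and the success check and the refinement rule are assumptions chosen to keep the example short.

```python
def fake_model(prompt):
    """Stand-in for an LLM call (hypothetical); replies vaguely unless asked for bullets."""
    if "bullet points" in prompt:
        return "- Faster setup\n- Lower cost\n- 24/7 support"
    return "It has many benefits."

def meets_goal(output):
    """Analyze the output against the task's goal (here: at least three bullets)."""
    return output.count("- ") >= 3

def refine(prompt):
    """Tighten the prompt's wording when the output misses the goal."""
    return prompt + " Answer as three bullet points."

def engineer_prompt(initial_prompt, model, max_rounds=3):
    """Repeat compose -> analyze -> refine until the goal is met or rounds run out."""
    prompt = initial_prompt
    for _ in range(max_rounds):
        output = model(prompt)
        if meets_goal(output):
            return prompt, output
        prompt = refine(prompt)
    return prompt, model(prompt)

final_prompt, final_output = engineer_prompt("List the benefits of our product.", fake_model)
print(final_prompt)
print(final_output)
```

In practice the same loop would be run against more than one model, with the check in `meets_goal` replaced by whatever criteria the task actually requires.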

Different Types of Prompt Engineering Techniques

Since LLMs are trained with massive amounts of data, users need to develop natural language processing (NLP) expertise to ensure the correct grammar and logic while crafting prompts.

Here’s a list of commonly used prompting techniques:

- Zero-Shot Prompting: In this technique, the LLM generates a response without being given any examples in the prompt, relying instead on the knowledge it acquired during training. This technique is ideal for generating responses to basic questions or general topics.

- One-Shot Prompting: This is a powerful strategy where the LLM is instructed to create a response based on a single example or template. This avoids overwhelming the model with multiple examples and works perfectly for specific tasks under a selected format.

- Few-Shot Prompting: This technique produces accurate responses from minimal input. The AI model performs a specific task after being shown only a few examples or templates in the prompt.

- Self-Consistency with Chain of Thought (CoT-SC): This advanced technique gives an LLM the same prompt several times, lets it reason step by step each time, and then chooses the most consistent answer across the responses.

- Tree of Thoughts Prompting: This approach aims to solve complex reasoning tasks by exploring several lines of reasoning, testing them, and refining the promising ones to reach an optimal solution.
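To make the difference between these techniques concrete, here is a minimal Python sketch. It builds zero-shot, one-shot, and few-shot versions of the same prompt and then applies a simple majority vote in the spirit of self-consistency. The reviews, labels, function names, and the canned sampler are all made up for illustration; a real setup would sample an actual LLM several times.

```python
from collections import Counter

def build_prompt(task, examples, query):
    """Zero-shot with no examples, one-shot with one, few-shot with several."""
    lines = [task]
    for text, label in examples:
        lines.append(f"Review: {text}\nSentiment: {label}")
    lines.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(lines)

def self_consistent_answer(sample_fn, prompt, n_samples=5):
    """Sample the model several times and keep the most frequent final answer."""
    answers = [sample_fn(prompt) for _ in range(n_samples)]
    return Counter(answers).most_common(1)[0][0]

TASK = "Classify the sentiment of each review as positive or negative."
EXAMPLES = [("Great battery life.", "positive"), ("Stopped working in a week.", "negative")]

zero_shot = build_prompt(TASK, [], "The screen is gorgeous.")
one_shot = build_prompt(TASK, EXAMPLES[:1], "The screen is gorgeous.")
few_shot = build_prompt(TASK, EXAMPLES, "The screen is gorgeous.")

# Hypothetical sampler: replays canned answers in place of repeated LLM calls.
canned = iter(["positive", "negative", "positive", "positive", "negative"])
answer = self_consistent_answer(lambda p: next(canned), few_shot)
print(few_shot)
print(answer)
```

The only thing that changes between the three prompt styles is how many worked examples precede the final query, which is why the same helper can produce all of them.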

How to Write Prompts and What are Examples of Prompt Engineering?

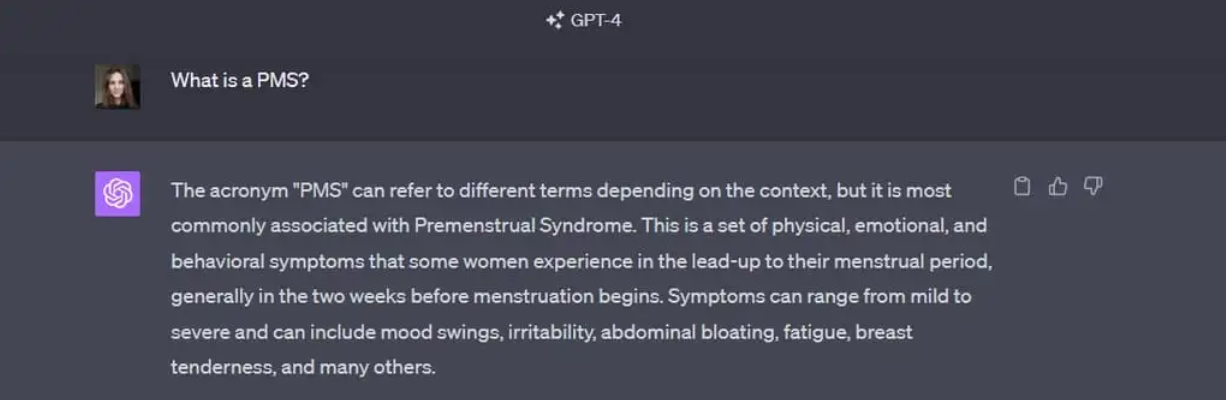

A well-engineered prompt delivers accurate and relevant responses. The screenshots below demonstrate how a carefully designed prompt can accelerate problem-solving and make learning models versatile and scalable.

Here’s an example of a prompt input in GPT-4 with unclear context that results in a generic response.

This shows that a poorly engineered prompt generates irrelevant responses and is not very useful in the business context.

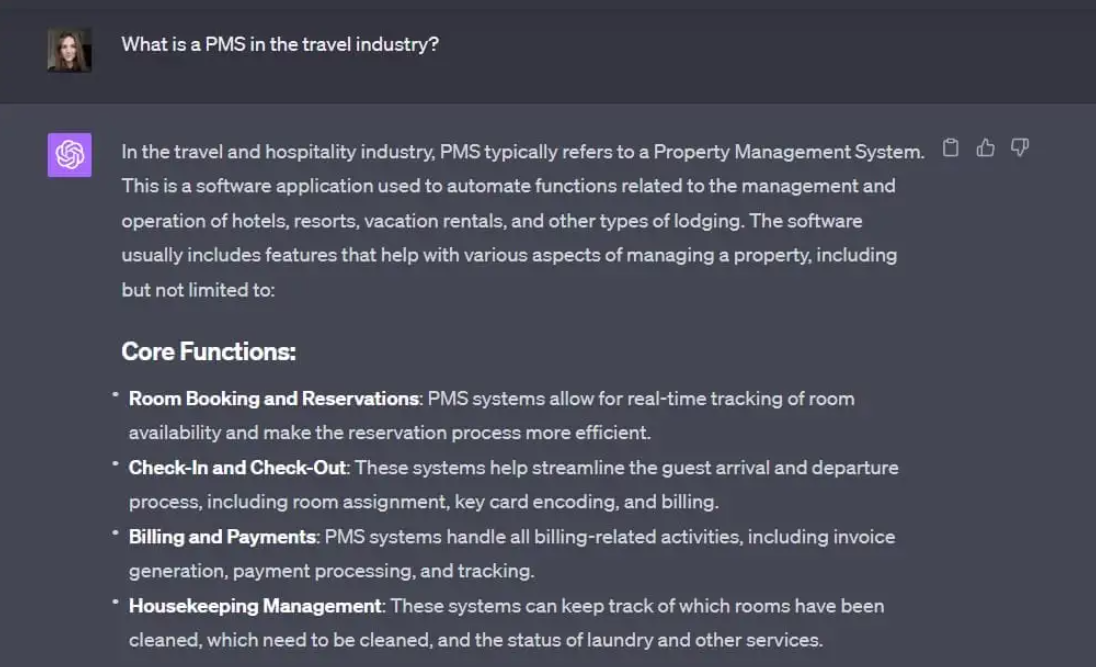

On the other hand, skillfully designed prompts help users to tailor the AI’s output to specific business needs.

It is evident that well-crafted prompts can be used across diverse business scenarios and help businesses tailor customer experiences.

Some of the prompt engineering examples are listed below:

- Text to Image Generation: Popular tools for this technology are DALL-E, Midjourney, and Adobe AI Image Generator. The concept of weighting is becoming prominent in these tools, owing to the need to emphasize specific parts of a prompt to generate the desired image.

- Question-Answering Systems: Q&A systems are a specific use case of LLMs that allow users to ask questions in web apps and receive immediate responses. These are easy-to-use systems that determine accurate answers using limited data from a user’s question.

- Chatbots and Virtual Assistants: AI chatbots and virtual assistants are being powered by vector databases to help AI models remember previous inputs, improve search, and enhance recommendations. These innovations are transforming the way businesses provide customer support and ensure customers get help for each query.

- Product Management: Skilled engineers are using gen AI prompts to outline a nine-month product roadmap for expanding a fintech platform, with a focus on incorporating AI for personalized customer experiences.

- Recommendation Systems: An increasing number of shoppers are becoming repeat customers owing to tailored customer experiences. These experiences are amplified by recommendation systems, where engineers provide the AI model with the shopper’s demographic details and product preferences and generate recommendations based on the customer’s purchase history.
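As a rough illustration of how a chatbot can "remember" earlier inputs through vector similarity, the sketch below stores past messages as vectors and recalls the closest one. Everything here is an assumption made for the example: the word-count `embed` function stands in for a real embedding model, `ConversationMemory` stands in for a vector database, and the messages are invented.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class ConversationMemory:
    """Hypothetical stand-in for a vector database holding past messages."""

    def __init__(self):
        self.entries = []  # list of (text, vector) pairs

    def add(self, text):
        self.entries.append((text, embed(text)))

    def recall(self, query, top_k=1):
        """Return the stored messages most similar to the query."""
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]

memory = ConversationMemory()
memory.add("My order number is 48213 and it has not arrived.")
memory.add("I prefer to be contacted by email.")
memory.add("The blue jacket I bought was too small.")

recalled = memory.recall("Where is my order?")
print(recalled)
```

The recalled message can then be added to the prompt as context, so the assistant responds with the customer's earlier details in view.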

What are the Skills Needed to be a Prompt Engineer?

GenAI is revolutionizing enterprise operations. Top leaders are witnessing 50% to 90% productivity improvements across industry use cases. Professionals with academic backgrounds in computer science or linguistics have a higher chance of being hired at firms to understand and optimize language models.

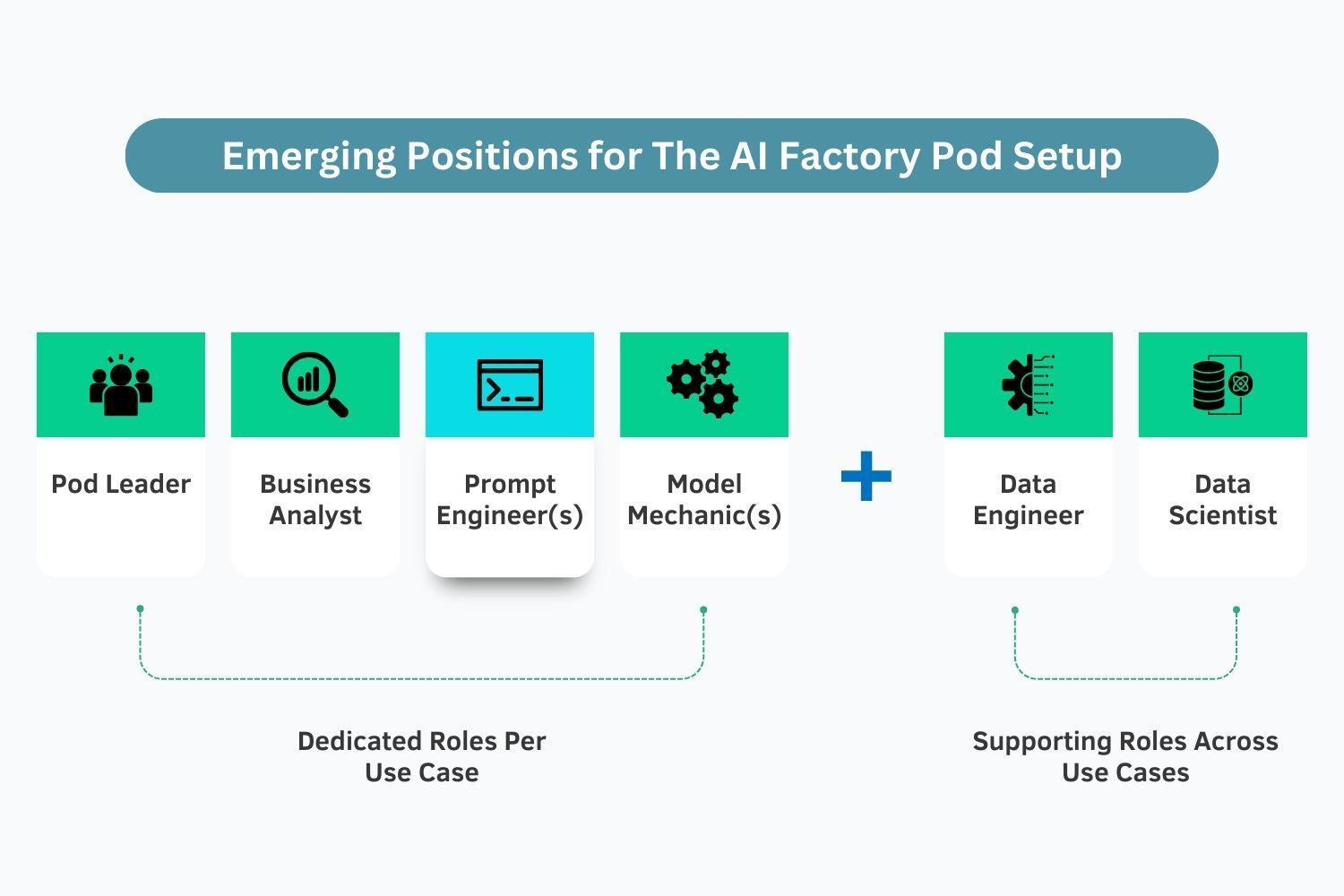

As the figure shows, apart from business analysts and data scientists, organizations are now hiring prompt engineers to design and fine-tune prompts to meet the objectives of specific use cases.

To be proficient in prompt engineering, here’s a list of skills required to interact with LLMs and produce contextually relevant responses efficiently:

- Deep Knowledge in AI, ML and NLP: As a prompt engineer, it is vital to understand the different variants of LLMs and their use cases. Highly skilled prompt engineers gain an understanding of the internal working frameworks of AI models and familiarize themselves with the limitations of AI, ML, and NLP for problem-solving.

- Programming Skills: Use cases like chatbots and embedding AI prompts in software make it imperative for engineers to learn programming languages.

- Proficiency in Defining Clear Problem Statements: To achieve the desired results, include specific details, such as the target audience, and clearly identify what responses to expect from language models.

- Creative Interaction with AI: A thoughtful approach to creativity while interacting with LLMs is to experiment and try different variations until the results are satisfactory.

- Data Analytics and Reporting Skills: Engineers must use data analytics and reporting to evaluate the correctness, completeness, and consistency of outputs.

- Transformers: Apart from NLP and analytics, engineers are gaining knowledge about transformers, a type of deep neural network designed for handling sequential data such as language. They are the foundation for LLMs and help models capture the contextual relationships between words in a sentence.
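The way transformers relate words to one another can be illustrated with a simplified version of their attention step. The sketch below is a toy under clear assumptions: the two-dimensional word vectors are made up, and the learned projections that real transformers apply to obtain queries, keys, and values are left out.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))

def self_attention(vectors):
    """Scaled dot-product attention using the inputs as queries, keys, and values.

    Real transformers first apply learned projections; that step is omitted here.
    """
    scale = math.sqrt(len(vectors[0]))
    outputs, all_weights = [], []
    for query in vectors:
        weights = softmax([dot(query, key) / scale for key in vectors])
        mixed = [sum(w * value[i] for w, value in zip(weights, vectors))
                 for i in range(len(query))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Made-up 2-dimensional vectors for three words; real embeddings are learned.
words = ["river", "bank", "loan"]
vectors = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]

outputs, weights = self_attention(vectors)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word draws on every word in the sequence, which is the mechanism behind the contextual relationships mentioned above.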

Best Practices for Writing Prompts

Designing the right prompts is an iterative process that requires several experiments to produce the desired responses.

These best practices help users to be specific and focus on the details that result in suitable outcomes from LLMs.

- Be Clear and Specific: A defined prompt minimizes ambiguity and allows LLMs to clearly understand the request’s context.

- Use Relevant Keywords: Relevant keywords act like magic words for crafting prompts, especially when giving an LLM special instructions to produce relevant results.

- Establish Context: Providing background information to AI models, such as scope, subject matter, and constraints, helps generate the specific response you’re aiming for.

- Refine the Prompt: Refining involves studying how the LLM responds to various prompts. An effective approach is to actively give AI applications feedback on the relevancy of the output produced.

- Experiment with Different Prompts: Trying out different techniques allows users to apply prompts to several applications, contexts, and use cases.
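One way to apply these practices consistently is to assemble prompts from named parts instead of writing them free-form. The helper below is a minimal sketch; the function name, the field labels, and the product details are hypothetical and only illustrate the contrast between a vague and a specific prompt.

```python
def build_structured_prompt(task, audience, context, constraints, keywords=()):
    """Assemble a prompt that states task, audience, context, and constraints explicitly."""
    if not task.strip():
        raise ValueError("task must not be empty")
    parts = [
        f"Task: {task}",
        f"Audience: {audience}",
        f"Context: {context}",
        "Constraints:",
    ]
    parts += [f"- {c}" for c in constraints]
    if keywords:
        parts.append("Keywords to include: " + ", ".join(keywords))
    return "\n".join(parts)

# A vague prompt leaves the model to guess the audience, scope, and format.
vague = "Write about our product."

# Made-up product details, used only to show a clear and specific prompt.
specific = build_structured_prompt(
    task="Write a product description for a reusable water bottle.",
    audience="Online shoppers comparing eco-friendly products",
    context="The bottle is stainless steel and keeps drinks cold for 24 hours.",
    constraints=["Under 80 words", "Friendly, plain-language tone"],
    keywords=["insulated", "BPA-free"],
)
print(specific)
```

Keeping the parts separate also makes experimentation easier, since one field can be changed at a time while the rest of the prompt stays fixed.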

Benefits of Prompt Engineering

Prompt engineering helps to create prompts that communicate the query to the AI model to generate optimized results.

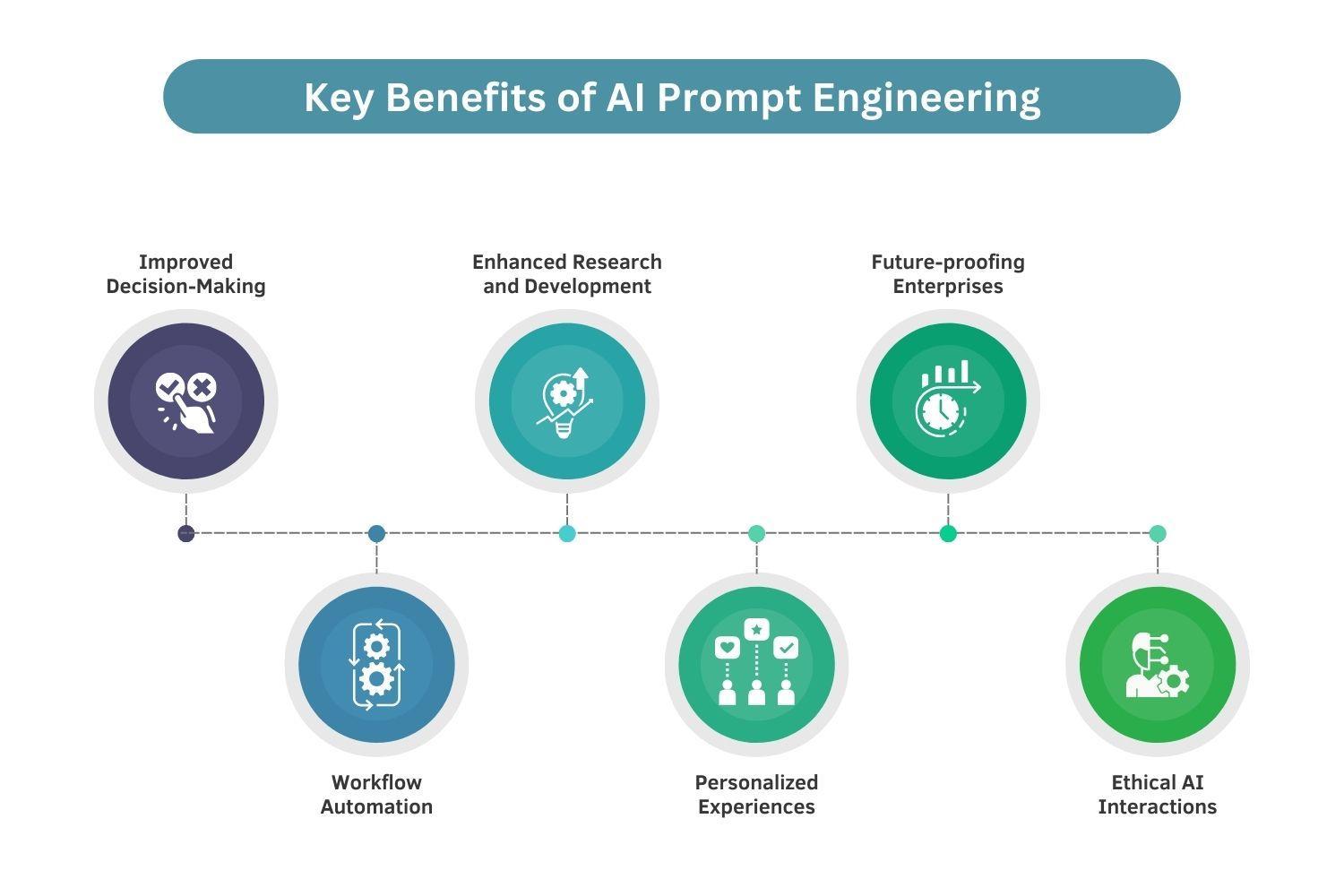

These benefits of prompt engineering are grabbing the attention of enterprise leaders who are adopting various strategies to improve their interactions with AI models:

- Improved Decision-Making: By perfecting prompts, organizations can acquire relevant information to gain competitive advantage and enable strategic business growth.

- Workflow Automation: Prompt engineers guide language models to generate code snippets and even entire programs using clear and specific instructions. This functionality enhances software development and automation processes.

- Enhanced Research and Development: By adopting optimal strategies, enterprises can emerge as technology leaders and adjust to evolving customer demands.

- Personalized Experiences: Since delivering exceptional customer experiences is a top priority for businesses, leaders and data teams can create AI models that facilitate personalized recommendations and tailored interactions with customers.

- Ethical AI Interactions: Enterprises are developing responsible AI solutions to address biases and promote fairness within AI systems.

- Future-Proofing Enterprises: By fine-tuning prompts, businesses can streamline AI processes, thus optimizing resource utilization and reducing costs.

Prompt Engineering Use Cases

Prompt engineering empowers organizations to leverage AI systems and tools to stay competitive in the rapidly evolving connected world.

Here is a list of use cases that businesses are harnessing to make data-driven decisions, improve customer experiences, and streamline operations.

- Code Generation: Prompt engineering is being deployed in code generation tools like OpenAI Codex and GitHub Copilot, which assist developers with game, web, and data applications.

- Customer Support Automation: Customer-care-based prompts are helping enterprises provide exceptional customer experiences. Teams are leveraging ChatGPT to design prompts that enhance customer support, provide personalized assistance, and manage complaints.

- Content Generation: As one of the primary functions of generative AI tools, tailored prompts help in drafting social media posts, writing articles, and generating product descriptions.

- Critical Thinking: Critical thinking applications require AI models to solve complex tasks. This involves analyzing information from different angles, examining its accuracy, and drawing logical conclusions. To achieve this, prompt engineers enhance the model’s data analysis capabilities.

- Data Analysis and Summarization: Financial reporting is one of the primary examples of data analysis and summarization. Using targeted prompts in AI tools, analysts can summarize reports, balance sheets, profit and loss statements, etc. to quickly extract market signals from the content.

What is the Future of Prompt Engineering?

The global prompt engineering market size was valued at USD 222.1 million in 2023 and is estimated to grow at a compound annual growth rate of 32.8% from 2024 to 2030. This suggests that prompt engineering is more than just NLP. It’s an important tool for adjusting and improving language model behavior. With thoughtful design approaches and innovative methods, users can leverage the capabilities of these language models and explore new opportunities in natural language processing.

As AI models become more complex, prompt engineers will act as the bridge for effective communication with them, making LLMs accessible and user-friendly for individuals and enterprises. From healthcare to entertainment, there’s a growing demand to democratize AI for people without technical expertise. Thus, prompt engineering will play an invaluable role in helping users interact with AI models: designing user-friendly prompts, creating intuitive interfaces, and ensuring that AI expands human capabilities.

Partner With AIT Global Inc. to Transform Your AI Capabilities with Prompt Engineering

Prompt engineering is crucial for tasks like data analysis, generating useful outputs, and information retrieval. With industry-wide experience in developing custom AI solutions and diversified AI-ML skills, our team of professionals helps enterprises craft tailored instructions for language models to generate meaningful outputs.

Using cutting-edge platforms like OpenAI, TensorFlow, and PyTorch, we deploy our AI & ML solutions to help clients make informed decisions and achieve business growth. Reach out to us for employing strategic and innovative approaches to guarantee exceptional results for Artificial Intelligence & Machine Learning projects. If you’re impressed with our takeaways in this blog, then spread the knowledge with your connections and check our latest blog on GenAI to understand its significance in business.
